There are many ways to add search functionality to a Rails application. While many Rails developers choose to use the native search functionality built into popular databases like MySQL and Postgres, others need more flexible or feature-rich search. Elasticsearch is probably the best-known option, but it has its own issues. Firstly, it is a resource-hungry beast: to run Elasticsearch properly in production, you need a few beefy servers. Secondly, getting Elasticsearch to return relevant search results is not easy. There are many options to configure and knobs to turn, which adds a lot of flexibility but takes away from simplicity.

Hosted alternatives like AWS OpenSearch (formerly AWS Elasticsearch) or Algolia can take away a lot of painful hosting problems, but they can become expensive very quickly.

For many use cases, there are simpler and cheaper alternatives. Typesense and MeiliSearch are two that we tried for one of our internal projects at Cloud 66.

After some research, trials and prototyping, we settled on MeiliSearch, so I want to share how we implemented search and integrated it with MeiliSearch.

Native MeiliSearch Rails Integration

There is an official MeiliSearch gem with decent documentation that you can use to index and search your ActiveRecord objects without much hassle. The native MeiliSearch/Rails integration works by adding include MeiliSearch and a meilisearch block to your ActiveRecord classes:

```ruby
class Student < ApplicationRecord
  include MeiliSearch

  meilisearch do
    attribute :name, :subject
  end
end
```

Now you can search your students:

```ruby
hits = Student.search('physics')
```

While this is simple, elegant and very effective, our use case was slightly different, so we needed to decouple our indexing and search from our basic domain objects.

Using MeiliSearch with an Admin App

A partial screenshot of MissionControl homepage


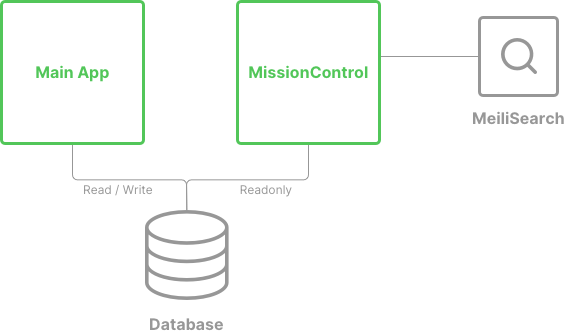

Internally, we wrote a tool called MissionControl, which helps our teams, from sales and marketing to customer advocates and engineers, support our business. MissionControl and our main application are both written in Rails and share the same database. However, the admin app (MissionControl) has read-only access to the database, while the main app handles around 2,000 database operations per second. On top of that, we didn't want to change and redeploy the main app every time we needed to add a new field to our search index. All of these factors, as well as other security and availability requirements, meant that MissionControl had to take care of its own search functionality instead of relying on the main application to keep the search indices up to date. In turn, this meant we couldn't use the native ActiveRecord-based index updates, since those are driven by model callbacks in whichever app saves the records.

Cloud 66 main app and MissionControl


Our final design for adding MeiliSearch to MissionControl is as follows:

- A rake task in MissionControl finds all changed records and updates the index.

- A second rake task in MissionControl finds all deleted records and removes them from the index.

- Both tasks are scheduled as cron jobs to run regularly and frequently.

- If needed, we can remove the entire search index (and clear the stored index metadata), and the next run will rebuild it from scratch.

Let's dig deeper.

First, we need to instantiate a MeiliSearch client. This can be done in a Rails initializer:

```ruby
require 'meilisearch'

$ms_client = MeiliSearch::Client.new(ENV.fetch('MEILISEARCH_URL') { 'http://127.0.0.1:7700' })
```

Indexing new and changed objects

Now on to the rake tasks. First, the index task, which is responsible for finding updated database records and adding or updating them in the index:

```ruby
desc "Indexes all docs"
task :run, [:name] => [:environment] do |t, args|
  # only run one of these tasks at a time: SET with nx: true only succeeds when
  # the key doesn't exist, so checking and taking the lock is one atomic step,
  # and ex: expires the lock if a run dies (pick a value longer than any run)
  unless $redis.set("mc_index_running", 1, nx: true, ex: 3600)
    puts "Another index process is running"
    next
  end

  begin
    BATCH_SIZE = 100
    INDEX_NAME = :searchable

    # this filters records that we don't want to index
    class_filters = {
      ::User => ->(x) { x.account.nil? },
      ::Account => ->(x) { x.main_user.nil? },
      # ... more classes here
    }

    # any class we want to index and the attributes on them we want indexed
    classes_to_index = {
      ::User => [
        :email,
        :name,
        :phone_number,
        :updated_at,
        { company_name: ->(x) { x.account.name } }
      ],
      ::Account => [
        :name,
        :company_name,
        :updated_at,
        { owner_email: ->(x) { x.main_user.email } }
      ],
      # ... more classes here
    }

    index = $ms_client.index(INDEX_NAME)

    # take the cutoff point before reading anything, so records changed
    # during this run are picked up again by the next one
    cutoff = Time.now.utc
    puts "Cutting off at #{cutoff}"

    # optionally restrict the run to a single class, e.g. "user"
    filter = args[:name]

    classes_to_index.each_pair do |clazz, attributes|
      name = clazz.name.to_s.downcase
      next if !filter.nil? && name != filter

      puts "Indexing #{name}"

      # get the last index cut off
      index_metadata = ::IndexMetadata.find_by(name: name)
      last_cut_off = index_metadata&.last_index
      puts "Last cutoff was #{last_cut_off || 'never'}"

      # symbols are DB columns, hashes hold lambdas for calculated attributes.
      # Any column the filters or lambdas need (e.g. the foreign key behind
      # x.account) has to be selected as well.
      db_attributes = attributes.filter { |x| x.is_a? Symbol }
      calculated_attributes = attributes.filter { |x| x.is_a? Hash }

      if last_cut_off
        query = clazz.select(:id, db_attributes).where('updated_at >= ?', last_cut_off)
        puts "#{clazz.where('updated_at >= ?', last_cut_off).count} new records found"
      else
        query = clazz.select(:id, db_attributes)
      end

      query.find_in_batches(batch_size: BATCH_SIZE) do |group|
        to_index = group.map do |x|
          next nil if class_filters[clazz].call(x)

          atts = x.attributes.dup
          # prefix IDs with the class name so they don't clash across classes
          atts['id'] = "#{name}-#{x.id}"
          atts['kind'] = name

          calculated_attributes.each do |c_attrs|
            c_attrs.each_pair do |key, value|
              atts[key] = value.call(x) if value.is_a? Proc
            end
          end

          next atts.symbolize_keys
        end

        to_index.compact!
        index.add_documents(to_index, 'id') unless to_index.empty?
      rescue => exc
        puts exc.message
        pp to_index
        raise
      end

      # store the cutoff so the next run starts where this one left off
      if index_metadata
        index_metadata.update(name: name, last_index: cutoff)
      else
        ::IndexMetadata.create(name: name, last_index: cutoff)
      end
    end
  ensure
    $redis.del("mc_index_running")
  end
end
```

Let's break down this task further. MissionControl uses ActiveRecord to access the shared database, so classes like ::User and ::Account are vanilla Rails ActiveRecord classes. Each of these classes has some basic column-based attributes, like email or name, which you can see in classes_to_index. We also wanted to index some "dynamic" attributes, which might come from a relationship of the main object. For example, you might want to index the name of the company a user works for alongside the user, but that piece of information is stored on the ::Account, which has a one-to-many relationship with ::User.

To allow this, we used a mix of symbols for basic attributes and lambdas for the dynamic ones:

```ruby
::User => [
  :email,
  :name,
  :phone_number,
  :updated_at,
  { company_name: ->(x) { x.account.name } }
],
```
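To see how a spec like this turns into a search document, here is a minimal, self-contained sketch in plain Ruby. It assumes hypothetical AccountRecord and UserRecord structs standing in for the ActiveRecord models and a hypothetical build_document helper; unlike the task above, it reads columns with public_send instead of attributes.

```ruby
# Hypothetical stand-ins for the ActiveRecord models (illustration only)
AccountRecord = Struct.new(:id, :name, keyword_init: true)
UserRecord = Struct.new(:id, :email, :name, :account, keyword_init: true)

# Build a search document from a record and an attribute spec:
# symbols name plain columns, hashes map keys to lambdas.
def build_document(record, kind, attributes)
  doc = { id: "#{kind}-#{record.id}", kind: kind }

  attributes.each do |attr|
    case attr
    when Symbol
      # plain column: read the value straight off the record
      doc[attr] = record.public_send(attr)
    when Hash
      # dynamic attribute: call each lambda with the record
      attr.each_pair { |key, fn| doc[key] = fn.call(record) }
    end
  end

  doc
end

user_spec = [:email, :name, { company_name: ->(x) { x.account.name } }]
acme = AccountRecord.new(id: 7, name: "Acme")
alice = UserRecord.new(id: 42, email: "alice@example.com", name: "Alice", account: acme)

doc = build_document(alice, "user", user_spec)
puts doc.inspect
```

The resulting hash carries the class-prefixed ID "user-42", the kind "user", both columns, and company_name "Acme" pulled through the association, which is the shape the task sends to MeiliSearch.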

Next, we need to read all the unindexed objects from the database. To do this, we use a simple model called IndexMetadata. IndexMetadata is stored in the database and holds the name of an indexed class and the timestamp of the last successful index run for that class. Using this, we only read objects whose updated_at is at or after the last index timestamp.

```ruby
class AddIndexMetadata < ActiveRecord::Migration[6.1]
  def change
    create_table :index_metadata do |t|
      t.string :name, null: false
      t.datetime :last_index, null: true
      t.timestamps
    end
  end
end
```

IndexMetadata migration

```ruby
class IndexMetadata < ApplicationRecord
end
```

models/index_metadata.rb

Now, back to the task code:

```ruby
index_metadata = ::IndexMetadata.find_by(name: name)
last_cut_off = index_metadata&.last_index

if last_cut_off
  query = clazz.select(:id, db_attributes).where('updated_at >= ?', last_cut_off)
  puts "#{clazz.where('updated_at >= ?', last_cut_off).count} new records found"
else
  query = clazz.select(:id, db_attributes)
end
```
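The cutoff handling is a high-watermark pattern: the new cutoff is captured before any records are read, records are selected with updated_at at or after the previous cutoff, and the new value is stored only once the class is indexed. A record updated while a run is in progress therefore gets a timestamp after the cutoff and is picked up by the next run. The in-memory sketch below illustrates this with plain hashes in place of database rows; records_to_index and index_pass are hypothetical helpers.

```ruby
# Records changed at or after the last cutoff (all records when there is none yet)
def records_to_index(records, last_cut_off)
  return records if last_cut_off.nil?

  records.select { |r| r[:updated_at] >= last_cut_off }
end

# One indexing pass: take the new cutoff *before* reading, index what changed,
# and return the cutoff to persist for the next pass (IndexMetadata's role).
def index_pass(records, last_cut_off, index, now: Time.now.utc)
  cutoff = now
  records_to_index(records, last_cut_off).each { |r| index[r[:id]] = r }
  cutoff
end

t0 = Time.utc(2022, 1, 1, 12, 0, 0)
records = [
  { id: 1, updated_at: t0 - 3600 },
  { id: 2, updated_at: t0 - 60 }
]
index = {}

# first pass: no cutoff stored yet, so every record is indexed
cutoff = index_pass(records, nil, index, now: t0)

# afterwards, record 2 is updated and record 3 is created
records[1] = { id: 2, updated_at: t0 + 30 }
records << { id: 3, updated_at: t0 + 45 }

# the second pass only needs to look at these
changed_ids = records_to_index(records, cutoff).map { |r| r[:id] }
puts changed_ids.inspect
```

Because Rails sets updated_at from the clock of the app server that saves the record, this approach assumes the main app's servers and MissionControl keep reasonably synchronized clocks.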

Now we have an ActiveRecord query that we can run to fetch all the objects we need to index for that class. Rather than loading every record at once, we use find_in_batches to read the records, and send them to MeiliSearch, in batches of BATCH_SIZE.

```ruby
query.find_in_batches(batch_size: BATCH_SIZE) do |group|
  # ...
end
```

For each batch, we iterate through the objects and build a hash of each object's database columns as well as its dynamic attributes.

```ruby
query.find_in_batches(batch_size: BATCH_SIZE) do |group|
  to_index = group.map do |x|
    # skip records we don't want in the index
    next nil if class_filters[clazz].call(x)

    # copy the DB columns, leaving out the 'params' column
    atts = x.attributes.except('params')
    atts['id'] = "#{name}-#{x.id}"
    atts['kind'] = name

    # add the calculated (lambda-based) attributes
    calculated_attributes.each do |c_attrs|
      c_attrs.each_pair do |key, value|
        atts[key] = value.call(x) if value.is_a? Proc
      end
    end

    next atts.symbolize_keys
  end

  to_index.compact!
  index.add_documents(to_index, 'id') unless to_index.empty?
rescue => exc
  puts exc.message
  pp to_index
  raise
end
```

As you can see, we also filter out some records that we don't want to index. Once this is done, to_index holds an array of hashes that we can push to MeiliSearch to be indexed. add_documents adds new documents and replaces any existing document with the same ID, so new and existing records go through the same code path.
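Because each document ID is prefixed with its class name, a user and an account that share the numeric ID 1 do not collide, and re-sending a record simply replaces its earlier version. The sketch below mimics only that add-or-replace behaviour with a hypothetical in-memory FakeIndex class; it is not the meilisearch-ruby client, which sends documents to the server where they are indexed asynchronously.

```ruby
# Hypothetical in-memory stand-in for a search index. It mimics only the
# add-or-replace-by-primary-key behaviour, not search or async tasks.
class FakeIndex
  attr_reader :docs

  def initialize
    @docs = {}
  end

  def add_documents(documents, primary_key)
    documents.each do |doc|
      # an existing document with the same ID is replaced entirely
      @docs[doc.fetch(primary_key.to_sym)] = doc
    end
  end
end

index = FakeIndex.new
index.add_documents([{ id: "user-1", name: "Alice" }, { id: "account-1", name: "Acme" }], 'id')

# a later run picks up the same user again after a name change
index.add_documents([{ id: "user-1", name: "Alice Smith" }], 'id')

puts index.docs.size
```

This is why overlapping runs are harmless: indexing the same record twice leaves exactly one, up-to-date document.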

Now all that's left is to update the IndexMetadata for the class we just indexed, so the next run starts where this one left off.

```ruby
if index_metadata
  index_metadata.update(name: name, last_index: cutoff)
else
  ::IndexMetadata.create(name: name, last_index: cutoff)
end
```

Removing deleted objects

The next rake task removes documents from the index when their objects have been deleted from the database. This is easy if your application uses some sort of soft delete. Without soft deletion, however, the record is simply gone from the database, so you don't know what to delete from the index. We solved this by comparing the index with the database:

```ruby
desc "Delete removed docs"
task :purge, [:name] => [:environment] do |t, args|
  INDEX_NAME = :searchable
  FETCH_LIMIT = 500

  index = $ms_client.index(INDEX_NAME)

  # fetch all docs from the search index in batches
  id_list = {
    'user' => [],
    'account' => [],
    # more classes here
  }

  puts "Fetching all documents in search index"
  offset = 0
  loop do
    # depending on your meilisearch-ruby version, documents may instead return a
    # hash with a 'results' key and expect fields: rather than attributesToRetrieve:
    ids = index.documents(limit: FETCH_LIMIT, offset: offset, attributesToRetrieve: 'id')

    # parse the IDs, e.g. "user-42" goes into id_list['user'] as 42
    ids.each do |doc|
      kind, id = doc['id'].split('-')
      id_list[kind] << id.to_i
    end

    offset += FETCH_LIMIT
    break if ids.count < FETCH_LIMIT # finished
  end

  # fetch all IDs of a table and return the indexed IDs missing from it
  def compare(id_list, idx)
    puts "Comparing #{id_list[idx].count} #{idx.pluralize} with database"

    # connect once per table rather than once per page
    connection = ::ActiveRecord::Base.establish_connection(:main).connection
    offset = 0
    ids = []
    loop do
      # ORDER BY keeps LIMIT/OFFSET paging stable between queries
      db_ids = connection.select_values(
        "SELECT id FROM #{idx.pluralize} ORDER BY id LIMIT #{FETCH_LIMIT} OFFSET #{offset}"
      )
      ids.concat(db_ids)
      offset += FETCH_LIMIT
      break if db_ids.count < FETCH_LIMIT # finished
    end

    id_list[idx] - ids
  end

  to_delete = []
  if args.count == 0
    to_delete << compare(id_list, 'user').map { |x| "user-#{x}" }
    to_delete << compare(id_list, 'account').map { |x| "account-#{x}" }
    # more classes here
  else
    to_delete << compare(id_list, args[:name]).map { |x| "#{args[:name]}-#{x}" }
  end

  to_delete.flatten!
  puts "Deleting #{to_delete.count} documents from search index"
  index.delete_documents(to_delete) unless to_delete.empty?
end
```
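The compare step pages through each table with LIMIT and OFFSET, which makes the database skip over every earlier row on each query, so later pages get slower on large tables. A common alternative, swapped in here and not what the task above does, is keyset pagination: remember the last ID seen and query WHERE id > last_id ORDER BY id LIMIT n. This sketch applies the idea to a sorted array standing in for the table's primary keys; fetch_page and all_ids are hypothetical helpers, and it assumes positive integer IDs.

```ruby
# Keyset pagination: resume after the last ID seen instead of using OFFSET.
# `table_ids` stands in for the table's primary keys, sorted ascending.
def fetch_page(table_ids, after_id, limit)
  # equivalent to: SELECT id FROM t WHERE id > after_id ORDER BY id LIMIT limit
  table_ids.select { |id| id > after_id }.first(limit)
end

def all_ids(table_ids, limit)
  ids = []
  last_id = 0 # assumes all IDs are positive integers
  loop do
    page = fetch_page(table_ids, last_id, limit)
    ids.concat(page)
    break if page.size < limit # a short (or empty) page means we are done
    last_id = page.last
  end
  ids
end

table = [1, 2, 5, 8, 13, 21, 34]
puts all_ids(table, 3).inspect
```

With an index on id, each such query can jump straight to the right position, so every page costs roughly the same no matter how deep into the table it is.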

This task has four main steps:

- Fetch the IDs of all documents in the index (this is very quick in MeiliSearch).

- Fetch the IDs of all records in the database. This could be improved using `VALUES` if you use MySQL 8.0.19+, but it is still very fast and memory efficient.

- Compare the ID sets and find the missing ones.

- Delete the missing ones from the index.
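The ID bookkeeping behind the first, third and fourth steps can be sketched in plain Ruby, assuming document IDs of the form kind-id as produced by the index task. group_index_ids and stale_document_ids are hypothetical helpers, and the database IDs (the second step) are hard-coded here instead of being queried.

```ruby
# Step 1: group index document IDs such as "user-42" by kind
def group_index_ids(doc_ids)
  doc_ids.each_with_object(Hash.new { |h, k| h[k] = [] }) do |doc_id, acc|
    kind, id = doc_id.split('-', 2)
    acc[kind] << Integer(id)
  end
end

# Steps 3 and 4: find indexed IDs missing from the database and turn them
# back into document IDs for delete_documents. Kinds that were not fetched
# from the database are skipped, so they are never deleted by accident.
def stale_document_ids(index_ids_by_kind, db_ids_by_kind)
  index_ids_by_kind.flat_map do |kind, ids|
    next [] unless db_ids_by_kind.key?(kind)

    (ids - db_ids_by_kind[kind]).map { |id| "#{kind}-#{id}" }
  end
end

index_ids = group_index_ids(["user-1", "user-2", "user-3", "account-7"])
db_ids = { "user" => [1, 3], "account" => [7] } # step 2, normally from SQL
stale = stale_document_ids(index_ids, db_ids)
puts stale.inspect
```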

Scheduling

You can now schedule these tasks to run every minute to keep your index up to date. While this will not give you a real-time index, it works for many use cases where you want the search functionality to be separate from your main application.

Searching

Searching the index works the same way regardless of how you index it. The MeiliSearch documentation covers both server-side and client-side search, so you can choose based on your requirements and your client's JavaScript stack.
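One detail worth handling when you load the matching records yourself: MeiliSearch returns hits in relevance order, but loading records per kind (for example with User.where(id: ids)) returns them in database order. A minimal sketch of restoring the ranking, assuming each hit is a hash with a string 'id' key in the kind-id format used by the index task; Record and in_hit_order are hypothetical, and filter_map needs Ruby 2.7+.

```ruby
# Hypothetical stand-in for records loaded from the database
Record = Struct.new(:kind, :id, :label)

# Re-sort loaded records to follow the hits' relevance order,
# dropping hits whose record no longer exists.
def in_hit_order(hits, records)
  by_doc_id = records.to_h { |r| ["#{r.kind}-#{r.id}", r] }
  hits.filter_map { |hit| by_doc_id[hit['id']] }
end

hits = [{ 'id' => 'user-42' }, { 'id' => 'account-7' }, { 'id' => 'user-3' }, { 'id' => 'user-99' }]
records = [
  Record.new('user', 3, 'Bob'),
  Record.new('user', 42, 'Alice'),
  Record.new('account', 7, 'Acme')
]

ordered = in_hit_order(hits, records)
puts ordered.map(&:label).inspect
```

Dropping unmatched hits also covers documents whose records were deleted from the database but not yet removed by the next scheduled purge run.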